The proposed system consists of three modules: object detection, object classification, and industrial robot control in a dynamic environment.

An AI-based stereo vision system for industrial process automation that contains all three of the described parts can be seen in the following video.

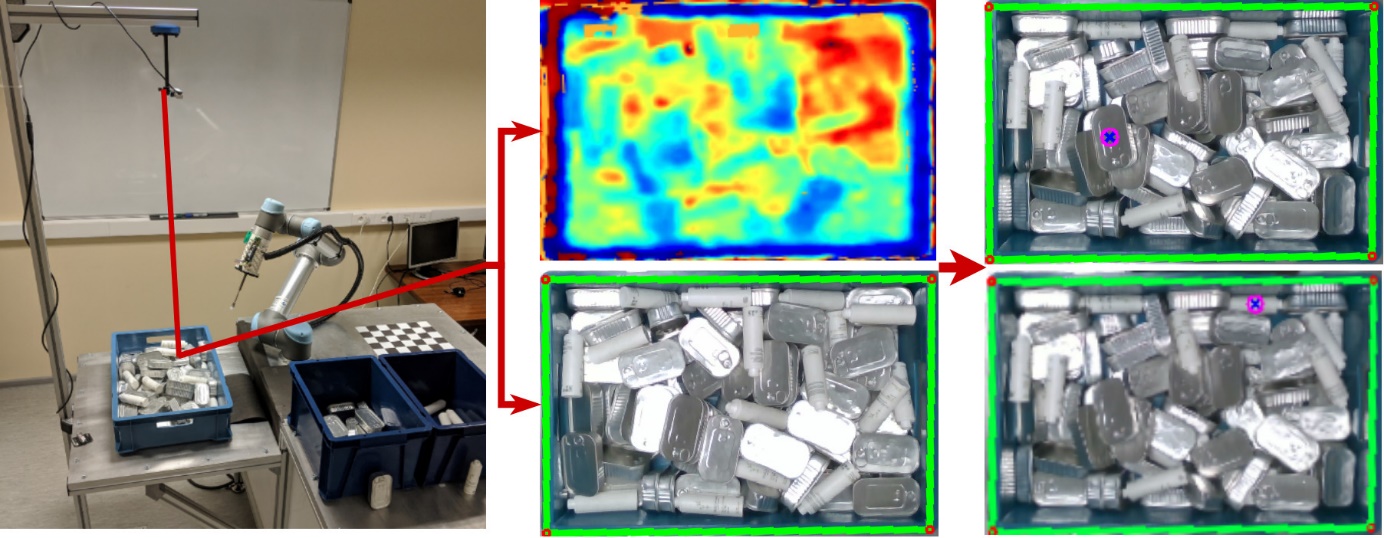

The object detection module is used to perceive the changing environment and modify the system's actions accordingly. It receives colour frames and depth information from a camera sensor and returns information about objects to the robot control. The camera sensor can be placed above the pile of objects or at the end-effector of the robot manipulator. The module is mainly responsible for detecting objects in the bin that can be picked by an industrial robot.
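To illustrate how a colour pixel and its depth value can become a position that robot control can use, the following is a minimal sketch based on the standard pinhole camera model. The function name `deproject_pixel` and the intrinsic parameters `fx`, `fy`, `cx`, `cy` are assumptions made for this example; the source does not state which camera model or calibration the system uses.

```python
from typing import Tuple


def deproject_pixel(u: float, v: float, depth_m: float,
                    fx: float, fy: float,
                    cx: float, cy: float) -> Tuple[float, float, float]:
    """Convert a pixel (u, v) with depth in metres to a 3D point in the camera frame.

    Assumes an ideal pinhole camera without lens distortion.
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


if __name__ == "__main__":
    # Hypothetical intrinsics for a 640x480 sensor.
    print(deproject_pixel(400, 300, 0.8, fx=600.0, fy=600.0, cx=320.0, cy=240.0))
```

The resulting point is expressed in the camera frame; a separate camera-to-robot transform (not shown) would be needed before it can be used as a pick position.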

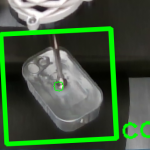

Object detection can be done using two different methods. The first method uses standard computer vision algorithms, with part of the functionality provided by the OpenCV library. The second method uses the CNN-based YOLO computer vision algorithm. YOLO is trained on synthetic data sets generated by EDI. Currently, YOLO training takes approximately 2 days on the HPC server, and the trained model can be used on a standard desktop PC. In a single frame, YOLO detects all objects that the model has been trained on and chooses the pickable object with the highest confidence rating; the pick position and name of that object are given for the same frame. YOLO has been tested with simple-shaped objects (bottle, can), but it can be trained and adjusted for objects with more complex shapes.
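The selection step described above, choosing the pickable object by highest confidence and reporting its name and pick position, can be sketched as follows. This is an illustration only: the `Detection` structure, the `min_confidence` threshold, and the use of the bounding-box centre as the pick position are assumptions for this example, not the system's actual interface.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Detection:
    """One detected object in a frame (hypothetical structure)."""
    name: str                                # class label, e.g. "bottle" or "can"
    confidence: float                        # detector confidence in [0, 1]
    box: Tuple[float, float, float, float]   # (x_min, y_min, x_max, y_max) in pixels


def choose_pick_target(
    detections: List[Detection],
    min_confidence: float = 0.5,
) -> Optional[Tuple[str, Tuple[float, float]]]:
    """Return (name, pick point in pixels) of the most confident detection.

    Returns None when no detection reaches min_confidence.
    """
    candidates = [d for d in detections if d.confidence >= min_confidence]
    if not candidates:
        return None
    best = max(candidates, key=lambda d: d.confidence)
    x_min, y_min, x_max, y_max = best.box
    # Assumption: the pick point is the centre of the bounding box.
    center = ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)
    return best.name, center


if __name__ == "__main__":
    frame = [
        Detection("bottle", 0.80, (0, 0, 10, 20)),
        Detection("can", 0.90, (10, 10, 30, 50)),
    ]
    print(choose_pick_target(frame))
```

A real pipeline would likely also check that the chosen object is reachable and not occluded; that logic is outside this sketch.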

Object classification

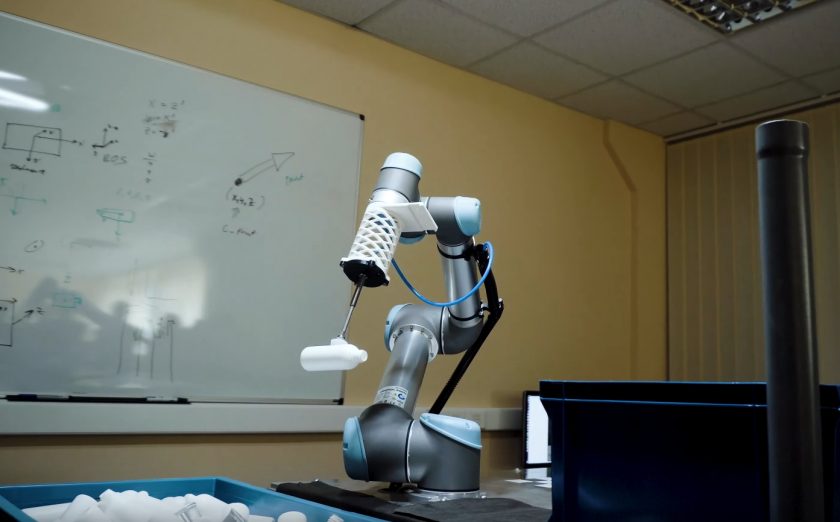

A deep convolutional neural network (CNN) is used to classify and sort objects. When the industrial robot picks an object, the object is then classified by the CNN. This technology allows different types of objects to be sorted, as can be seen in the figures, where two different kinds of objects are being sorted.

To train the classifier to recognize new classes of objects, new training datasets must be provided. Training can be done on a standard desktop PC. To reach a precision of up to 99%, the training requires at least 1000 images of the object. The maximum number of object classes is not specified; the system has been tested with 7 different classes.

Robot control in dynamic environment

Robot control in a dynamic environment is mainly responsible for movement and trajectory creation depending on the object's position in the container, the object class, or other information from sensors. In the selected demonstration example, picking and placing arbitrarily arranged objects of different types, which can be seen in the following picture, robot movements are created dynamically according to the object's position in the container. This position is not predefined, so trajectories are generated after object localization and cannot be preprogrammed. Changes in the dynamic environment are taken into account to avoid collisions while executing movements. In this case, the robot mainly needs to avoid collisions with the box that contains the objects.
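One simple way to generate a pick motion after localization while staying clear of the box is to approach from above the rim, descend vertically, and retreat along the same line. The sketch below illustrates that idea only; the function `plan_pick_waypoints`, its parameters, and the top-down approach strategy are assumptions for this example, and the actual system may plan trajectories differently.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def plan_pick_waypoints(obj_xyz: Point, box_rim_z: float,
                        clearance: float = 0.05) -> List[Point]:
    """Return approach, grasp, and retreat waypoints for a top-down pick.

    obj_xyz   : localized object position in the robot frame (metres)
    box_rim_z : height of the box rim in the robot frame (metres)
    clearance : extra height kept above the rim (or the object, if higher)
    """
    x, y, z = obj_xyz
    # Travel height stays above both the rim and the object itself.
    safe_z = max(box_rim_z, z) + clearance
    approach = (x, y, safe_z)   # move here first, above the box
    grasp = (x, y, z)           # descend vertically to the object
    retreat = (x, y, safe_z)    # lift straight up before moving away
    return [approach, grasp, retreat]


if __name__ == "__main__":
    for waypoint in plan_pick_waypoints((0.40, 0.10, 0.125), box_rim_z=0.5,
                                        clearance=0.25):
        print(waypoint)
```

Because the waypoints depend on the localized position, they have to be computed for every pick, which matches the point that these trajectories cannot be preprogrammed.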

Contact information:

Janis Arents